Recap

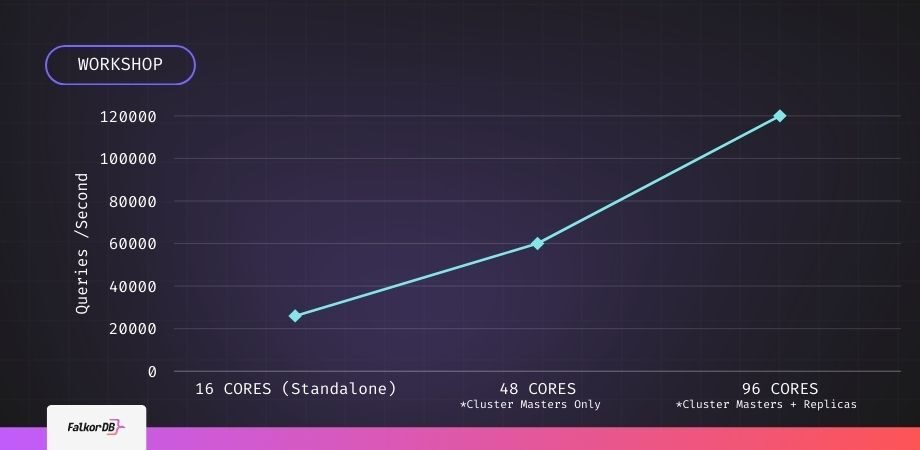

FalkorDB’s team assessed how a multi-tenant graph database handles an access-permission workload and whether throughput scales when moving from a single server to a clustered deployment. The workshop used a real-world pattern, “Does user X have permission to file Y?”, and measured queries per second (QPS) under three hardware configurations.

| Test setup | Cores | Graph layout | QPS (mean) | Scaling reasoning |
|---|---|---|---|---|
| Single instance | 16 | 629 isolated graphs on one node | ≈ 25 k | Baseline |
| 3-master cluster | 48 | Graphs distributed by key-space slots across three masters | ≈ 60 k | Deductive: tripling compute raised throughput ~2.4×, close to linear |
| 3 masters + 3 replicas | 96 | Masters handle writes; replicas added as read targets | ≈ 120 k | Inductive: doubling compute again doubled read throughput, sustaining linear trend |
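The scaling factors in the table can be checked with a few lines of arithmetic. The sketch below uses the rounded QPS figures from the table; the `scaling_report` helper and the "efficiency" measure (QPS ratio divided by core ratio, relative to the single-instance baseline) are illustrative assumptions, not something the workshop defined.

```python
# Approximate figures copied from the results table (rounded, mean QPS).
RESULTS = [
    ("Single instance", 16, 25_000),
    ("3-master cluster", 48, 60_000),
    ("3 masters + 3 replicas", 96, 120_000),
]


def scaling_report(results):
    """Return (name, core_ratio, qps_ratio, efficiency) relative to the first row."""
    _, base_cores, base_qps = results[0]
    report = []
    for name, cores, qps in results:
        core_ratio = cores / base_cores
        qps_ratio = qps / base_qps
        # An efficiency of 1.0 would mean perfectly linear scaling from the baseline.
        report.append((name, core_ratio, qps_ratio, qps_ratio / core_ratio))
    return report


for name, core_ratio, qps_ratio, eff in scaling_report(RESULTS):
    print(f"{name}: {core_ratio:.1f}x cores, {qps_ratio:.1f}x QPS, efficiency {eff:.2f}")
```

On these rounded numbers, both clustered setups deliver about 0.8 of ideal linear scaling relative to the 16-core baseline, and the step from 48 to 96 cores keeps that ratio unchanged.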

Key Observations

Linear throughput growth. Going from 16 to 48 cores raised throughput about 2.4×, and going from 48 to 96 cores doubled read throughput again. Capacity grew roughly in proportion to added compute, which suggests low coordination overhead across shards.
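One way to see why coordination overhead can stay low: if the cluster follows the standard Redis Cluster hash-slot scheme (an assumption here, based on the "key-space slots" wording in the table), each graph key maps to exactly one of 16384 slots, and each slot is owned by one master. The sketch below computes that slot with CRC16/XMODEM. The `tenant:N` key names are hypothetical.

```python
import binascii

NUM_SLOTS = 16384  # Redis Cluster divides the key space into 16384 hash slots.


def key_slot(key: str) -> int:
    """Hash slot for a key under Redis Cluster rules (CRC16/XMODEM mod 16384)."""
    data = key.encode("utf-8")
    # Hash tags: if the key contains a non-empty {...} section,
    # only the text between the first "{" and the next "}" is hashed.
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end > start + 1:
            data = data[start + 1:end]
    return binascii.crc_hqx(data, 0) % NUM_SLOTS


# Hypothetical tenant graph keys; the naming scheme is an assumption.
for tenant_key in ("tenant:1", "tenant:2", "tenant:3"):
    print(tenant_key, "-> slot", key_slot(tenant_key))
```

Because a query names a single graph key, it resolves to a single slot and therefore a single master, so no cross-shard work is needed to answer it.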

Graph isolation at query time. Every query targets a single graph key, so queries do not need a second, tenant-specific label filter, and the risk of leaking data between tenants is reduced.
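A minimal sketch of what a per-tenant permission check could look like under this model. The graph naming scheme (`tenant:<id>`), the `User`/`File` labels, and the `CAN_ACCESS` relationship are all hypothetical; the workshop did not publish its schema. The point is that the tenant appears only in the graph key, never as a filter inside the query.

```python
def permission_check(tenant_id: str, user_id: str, file_id: str):
    """Build the graph key, Cypher text, and parameters for one permission check."""
    # Hypothetical naming: one graph per tenant, selected by key.
    graph_key = f"tenant:{tenant_id}"
    # Hypothetical schema: (:User)-[:CAN_ACCESS]->(:File).
    # The query carries no tenant predicate; isolation comes from the graph key.
    cypher = (
        "MATCH (u:User {id: $user_id})-[:CAN_ACCESS]->(f:File {id: $file_id}) "
        "RETURN count(f) > 0 AS allowed"
    )
    params = {"user_id": user_id, "file_id": file_id}
    return graph_key, cypher, params


graph_key, cypher, params = permission_check("acme", "user-42", "file-7")
print(graph_key)
print(cypher)
print(params)
```

With the FalkorDB Python client, the result would typically be passed along as `db.select_graph(graph_key).query(cypher, params)`; check the call against the client version in use.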

Replica lag is negligible for reads. The team noted only a brief propagation delay from master to replica; writes are still handled only by the masters.

Workshop Q&A

Can I add more replicas to achieve more operations per second?

Is the multigraph functionality available in the open-source version of FalkorDB?

Is there full isolation between masters and replicas?

Are multi-tenant graphs a good mechanism for sharding?

Can I run a query distributed between multiple graphs and return one result set?

Is there any overhead when adding more graphs?
