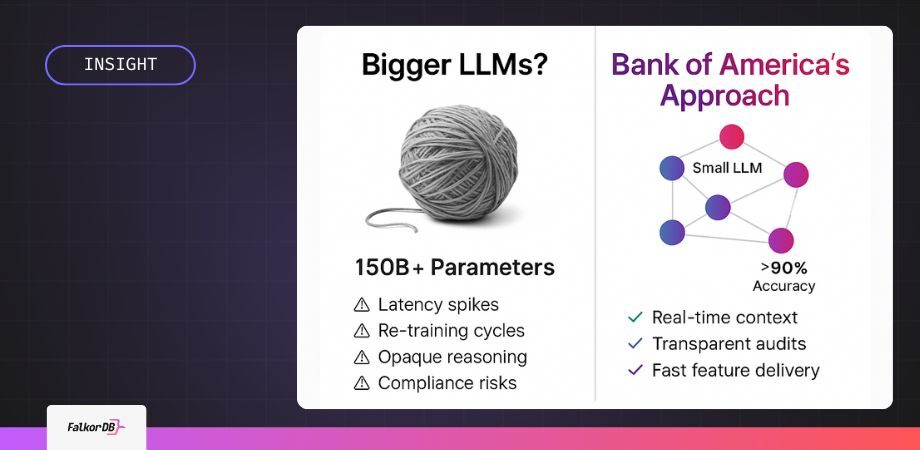

- Small LLMs outperform large models in focused enterprise AI workflows.

- GraphRAG reduces latency and ensures transparent, auditable AI retrieval.

- In-house AI paired with graph databases accelerates feature delivery.

Bank of America’s $4 billion AI investment signals ambition, but its most effective AI solution isn’t a massive LLM. It’s Erica, a lightweight agent delivering measurable ROI through precision, speed, and adaptability. This isn’t about chasing model size; it’s about smart architecture.

Small, Task-Specific Models Outperform Jumbo LLMs in Enterprise Workflows

Enterprise workflows demand accuracy, low latency, and compliance, not creative text generation. Bank of America recognized this in 2018 when it launched Erica, a task-specific AI agent designed for focused interactions.

“We are not writing essays with Erica… We’re trying to understand short bursts of information a customer asks us,” said Hari Gopalkrishnan, Head of Consumer, Business, and Wealth Management Technology at Bank of America [1].

Erica has handled over 2 billion interactions for 42 million users while maintaining accuracy above 90% through continuous tuning on real customer feedback [2]. Its success illustrates why small language models (SLMs) are better suited to domain-specific tasks than general-purpose LLMs carrying parameters irrelevant to the job.

Why Graph-Based Retrieval Keeps AI Agents Accurate and Fast

Generative AI in production fails when context delivery is slow or imprecise. Graph Retrieval-Augmented Generation (GraphRAG) solves this by treating relationships as first-class data. Instead of relying on vector similarity over unstructured embeddings, GraphRAG systems deliver structured, explicit context.

In our experience, a query can move from customer → account → product → rule in one pattern match, delivering the model an explicit, current context instead of a fuzzy approximation.
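To make the "one pattern match" idea concrete, here is a minimal in-memory sketch of a multi-hop traversal. It is not FalkorDB's engine: node ids, properties, and the `GOVERNED_BY` relationship are hypothetical, while `OWNS` and `LINKED_TO` mirror the query shown later in this post. Each hop is a direct adjacency lookup, so the model receives the exact chain of facts rather than nearest-neighbor guesses.

```python
from collections import defaultdict

# Toy graph for illustration only: ids, properties, and GOVERNED_BY are hypothetical.
props = {
    "c123": {"label": "Customer", "name": "Ada"},
    "a1": {"label": "Account", "balance": 2500.0},
    "p1": {"label": "Product", "type": "checking"},
    "r1": {"label": "Rule", "text": "Overdraft fee waived below $50"},
}

adjacency = defaultdict(list)  # (node id, relationship type) -> [target node ids]
for src, rel, dst in [
    ("c123", "OWNS", "a1"),
    ("a1", "LINKED_TO", "p1"),
    ("p1", "GOVERNED_BY", "r1"),
]:
    adjacency[(src, rel)].append(dst)


def match_pattern(start, rel_path):
    """Expand start hop by hop along rel_path; return every complete node path."""
    paths = [[start]]
    for rel in rel_path:
        # Each hop is a dictionary lookup on stored edges: no table scan, no join.
        paths = [p + [nxt] for p in paths for nxt in adjacency.get((p[-1], rel), [])]
    return paths


def context_for(path):
    """Collect the properties along a matched path as explicit context for the model."""
    return [props[node] for node in path]


matches = match_pattern("c123", ["OWNS", "LINKED_TO", "GOVERNED_BY"])
context = context_for(matches[0])
```

A pattern that does not exist in the data, such as `match_pattern("c123", ["LINKED_TO"])`, simply returns an empty list, so the agent gets no context rather than an approximate one.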

Graph-based retrieval reduces query latency by up to 70% compared to relational joins in enterprise environments [3]. This kind of architecture helps keep AI agents like Erica responsive, predictable, and compliant.
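For contrast, the sketch below answers the same customer → account → product question over flat tables using hash joins. It illustrates the mechanism only and does not reproduce the latency figure cited above; table and column names are hypothetical. The point is structural: every hop needs a lookup structure on the join key and produces an intermediate result, whereas a graph follows stored references directly.

```python
# Hypothetical flat tables holding the same kind of data as a customer -> account -> product
# graph; column names are prefixed so merged rows never collide.
customers = [{"c_id": 123, "c_name": "Ada"}, {"c_id": 456, "c_name": "Ben"}]
accounts = [
    {"a_id": "a1", "a_customer_id": 123, "a_balance": 2500.0},
    {"a_id": "a2", "a_customer_id": 456, "a_balance": 90.0},
]
account_products = [
    {"l_account_id": "a1", "l_product_id": "p1"},
    {"l_account_id": "a2", "l_product_id": "p1"},
]
products = [{"p_id": "p1", "p_type": "checking"}]


def hash_join(left, right, left_key, right_key):
    """Equi-join: build a hash index on right[right_key], then probe it with each left row."""
    index = {}
    for rrow in right:
        index.setdefault(rrow[right_key], []).append(rrow)
    return [{**lrow, **rrow} for lrow in left for rrow in index.get(lrow[left_key], [])]


# The two graph hops (OWNS, LINKED_TO) become three joins here, because the
# many-to-many account/product link needs its own table.
start = [c for c in customers if c["c_id"] == 123]
step1 = hash_join(start, accounts, "c_id", "a_customer_id")
step2 = hash_join(step1, account_products, "a_id", "l_account_id")
step3 = hash_join(step2, products, "l_product_id", "p_id")
rows = [(r["c_name"], r["a_balance"], r["p_type"]) for r in step3]
```

Production databases maintain indexes instead of rebuilding them per query, but each join still costs an index probe and an intermediate row set, which is the overhead graph traversal avoids.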

Key Challenges in Scaling AI Agents Like Erica

While Erica is a success story, scaling similar agents exposes recurring architectural risks:

| Challenge | Why It Matters |
| --- | --- |
| Domain Overfitting | New products or regulations can degrade accuracy below acceptable thresholds. |
| Stateless Interaction Loop | Context loss inflates latency and forces repetitive prompts. |
| Opaque Reasoning | Fails traceability requirements under frameworks like Basel III. |
| Feature Shipping by Fine-Tune | Every new feature burns GPU hours and extends QA cycles. |
| Monolithic Data Stack | In-house systems limit cross-channel insights and fintech collaboration. |

How GraphRAG Addresses These Enterprise AI Pain Points

GraphRAG introduces a retrieval layer that maintains state, provides transparent reasoning paths, and decouples feature deployment from costly retraining cycles.

- Stateful Context: Graph structures store ongoing sessions, eliminating re-prompting delays.

- Transparent Provenance: Every AI response can trace its context through graph relationships, which is critical for audits.

- Rapid Feature Deployment: New policies or workflows are integrated by adding nodes and edges, avoiding full model retrains.
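The three properties above can be sketched with a toy in-memory graph. `ContextGraph`, the `HAS_SESSION` and `GOVERNED_BY` relationships, and all ids are hypothetical stand-ins, not FalkorDB's API: session state is stored as a node the next turn can read back, every retrieval returns the node/edge chain it came from, and a new policy ships as a node plus an edge with no retraining step.

```python
from collections import defaultdict


class ContextGraph:
    """Toy in-memory property graph: a hypothetical stand-in for a graph database."""

    def __init__(self):
        self.props = {}               # node id -> property dict
        self.out = defaultdict(list)  # node id -> [(relationship type, target id)]

    def add_node(self, node_id, **props):
        self.props[node_id] = props

    def add_edge(self, src, rel, dst):
        self.out[src].append((rel, dst))

    def retrieve(self, start, rel_path):
        """Return one (facts, provenance) pair per path that follows rel_path from start."""
        paths = [[start]]
        for rel in rel_path:
            paths = [p + [dst] for p in paths for r, dst in self.out.get(p[-1], []) if r == rel]
        results = []
        for p in paths:
            facts = [self.props[n] for n in p[1:]]
            # Provenance: the exact node/edge chain behind the facts, suitable for an audit log.
            provenance = p[0] + "".join(f"-[{r}]->{n}" for r, n in zip(rel_path, p[1:]))
            results.append((facts, provenance))
        return results


g = ContextGraph()
g.add_node("c123", name="Ada")
g.add_node("a1", balance=2500.0)
g.add_node("p1", type="checking")
g.add_edge("c123", "OWNS", "a1")
g.add_edge("a1", "LINKED_TO", "p1")

# Stateful context: the session lives in the graph, so the next turn can read it back.
g.add_node("s1", last_topic="overdraft fees")
g.add_edge("c123", "HAS_SESSION", "s1")

# Rapid feature deployment: a new policy is a node plus an edge, with no retraining step.
g.add_node("rule42", text="Overdraft fee waived below $50")
g.add_edge("p1", "GOVERNED_BY", "rule42")

session = g.retrieve("c123", ["HAS_SESSION"])
facts, provenance = g.retrieve("c123", ["OWNS", "LINKED_TO", "GOVERNED_BY"])[0]
```

Here `provenance` is the string `c123-[OWNS]->a1-[LINKED_TO]->p1-[GOVERNED_BY]->rule42`, which could be logged next to the model's answer so an auditor can replay exactly which facts it saw.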

Example: Customer Context Retrieval with FalkorDB

A GraphRAG query delivering real-time customer context:

```
GRAPH.QUERY bank_graph "MATCH (c:Customer {id: 123})-[:OWNS]->(a:Account)-[:LINKED_TO]->(p:Product) RETURN c.name, a.balance, p.type"
```

This single query gives an AI agent immediate, structured context, with no SQL joins and no embedding guesswork.
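Once the rows come back, they still have to be turned into prompt context. The sketch below hard-codes hypothetical rows shaped like the query's `RETURN c.name, a.balance, p.type`; in a live deployment they would come from a FalkorDB client (for example, the `falkordb` Python package's `select_graph("bank_graph").query(...)`, whose `result_set` holds the rows), ideally with the customer id passed as a query parameter rather than hard-coded. Python's standard `string.Template` stands in here for a framework prompt template; the template text and function name are illustrative assumptions.

```python
from string import Template

# Hypothetical rows shaped like the query's RETURN clause: (c.name, a.balance, p.type).
rows = [("Ada", 2500.0, "checking"), ("Ada", 12000.0, "savings")]

PROMPT = Template(
    "Answer using only the customer context below.\n"
    "$context\n"
    "Question: $question"
)


def rows_to_context(rows):
    """Render each result row as one explicit fact line for the prompt."""
    return "\n".join(
        f"- {name}'s account (balance {balance:.2f}) is linked to a {ptype} product"
        for name, balance, ptype in rows
    )


prompt = PROMPT.substitute(
    context=rows_to_context(rows),
    question="What is my checking account balance?",
)
```

Rendering one fact per line keeps the context short and lets the model (and a reviewer) see exactly which graph results informed the answer.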

For integration into LLM pipelines, frameworks like LangChain support connecting GraphRAG systems to prompt templates and agent workflows [4].

In-House AI Development vs. Vendor Solutions: Striking the Right Balance

Bank of America’s decision to build Erica in-house delivered a tailored UX. But replicating this approach without leveraging proven graph-based infrastructure can trap teams in cycles of redundant engineering.

“Building an entire retrieval layer from scratch repeats solved data-lineage problems,” as FalkorDB emphasizes in production deployments.

Best practice: Combine in-house domain expertise with graph-native retrieval systems to accelerate delivery, maintain compliance, and avoid architectural dead-ends.

Next steps

Enterprise AI isn’t a contest of parameter counts. It’s about pairing lightweight, task-specific models with retrieval architectures that deliver precision, speed, and transparency.

Next Step:

Review FalkorDB’s GraphRAG integration examples to optimize your AI agent workflows and reduce latency without sacrificing compliance.


Build fast and accurate GenAI apps with GraphRAG SDK at scale

FalkorDB offers an accurate, multi-tenant RAG solution based on our low-latency, scalable graph database technology. Built for highly technical teams that handle complex, interconnected data in real time, it helps LLMs produce fewer hallucinations and more accurate responses.