If you are looking for a simple way to create and query a knowledge graph based on your internal documents, you should check out LlamaIndex. LlamaIndex is a tool that allows you to easily build and search a knowledge graph using natural language queries.

In this blog post, I will show you in six simple steps how to use LlamaIndex to create and explore a knowledge graph stored in FalkorDB.

1. Installing LlamaIndex

First, install LlamaIndex on your machine from the command line with pip:

> pip install llama-index

2. Starting FalkorDB server locally

Starting a local FalkorDB server is as simple as running a Docker container. The FalkorDB documentation describes other ways to run it.

> docker run -p 6379:6379 -it --rm falkordb/falkordb:latest

6:C 26 Aug 2023 08:36:26.297 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

6:C 26 Aug 2023 08:36:26.297 # Redis version=7.0.12, bits=64, commit=00000000, modified=0, pid=6, just started

…

…

6:M 26 Aug 2023 08:36:26.322 * <graph> Starting up FalkorDB version 99.99.99.

6:M 26 Aug 2023 08:36:26.324 * <graph> Thread pool created, using 8 threads.

6:M 26 Aug 2023 08:36:26.324 * <graph> Maximum number of OpenMP threads set to 8

6:M 26 Aug 2023 08:36:26.324 * <graph> Query backlog size: 1000

6:M 26 Aug 2023 08:36:26.324 * Module 'graph' loaded from /FalkorDB/bin/linux-x64-release/src/falkordb.so

6:M 26 Aug 2023 08:36:26.324 * Ready to accept connections
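Before moving on, it can help to confirm that the server is actually reachable from Python. The following is a minimal sketch using only the standard library; the helper name `is_port_open` is my own and not part of FalkorDB or LlamaIndex, and it only checks that something accepts TCP connections on the port, not that it is FalkorDB.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    # 6379 is the port published by the docker command above.
    print("FalkorDB reachable:", is_port_open("localhost", 6379))
```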

The rest of this post covers the steps you need to get started. The full notebook is available in the LlamaIndex repository as FalkorDBGraphDemo.ipynb.

3. Set your OpenAI key

Get your OpenAI key from https://platform.openai.com/account/api-keys and set it in the code below:

import os

os.environ["OPENAI_API_KEY"] = "API_KEY_HERE"
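A key pasted into a notebook is easy to leak, and a forgotten placeholder only fails later, during indexing. As a rough sketch of one alternative, you could export the key in your shell and check it up front. The helper `require_api_key` is hypothetical, not an OpenAI or LlamaIndex function, and it treats the `API_KEY_HERE` placeholder used in this post as "not set".

```python
import os


def require_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the key stored under `name`, or raise if it is unset or a placeholder."""
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key or key == "API_KEY_HERE":
        raise RuntimeError(f"{name} is not set; export it before running the notebook")
    return key
```

Calling `require_api_key()` at the top of the notebook fails fast with a clear message instead of an authentication error halfway through the build.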

4. Connecting to FalkorDB with FalkorDBGraphStore

Note that you might need to install the Redis Python client if it is missing:

> pip install redis

from llama_index.graph_stores import FalkorDBGraphStore

graph_store = FalkorDBGraphStore("redis://localhost:6379", decode_responses=True)

… INFO:numexpr.utils:NumExpr defaulting to 8 threads.

5. Building the Knowledge Graph

Next, we’ll load some sample data using SimpleDirectoryReader:

from llama_index import (

SimpleDirectoryReader,

ServiceContext,

KnowledgeGraphIndex,

)

from llama_index.llms import OpenAI

from IPython.display import Markdown, display

# load the sample documents from a local directory

documents = SimpleDirectoryReader(

"../../../../examples/paul_graham_essay/data"

).load_data()

Now all that is left is to let LlamaIndex use the LLM to generate the knowledge graph:

from llama_index.storage.storage_context import StorageContext

# define LLM

llm = OpenAI(temperature=0, model="gpt-3.5-turbo")

service_context = ServiceContext.from_defaults(llm=llm, chunk_size=512)

storage_context = StorageContext.from_defaults(graph_store=graph_store)

# NOTE: can take a while!

index = KnowledgeGraphIndex.from_documents(

documents,

max_triplets_per_chunk=2,

storage_context=storage_context,

service_context=service_context,

)

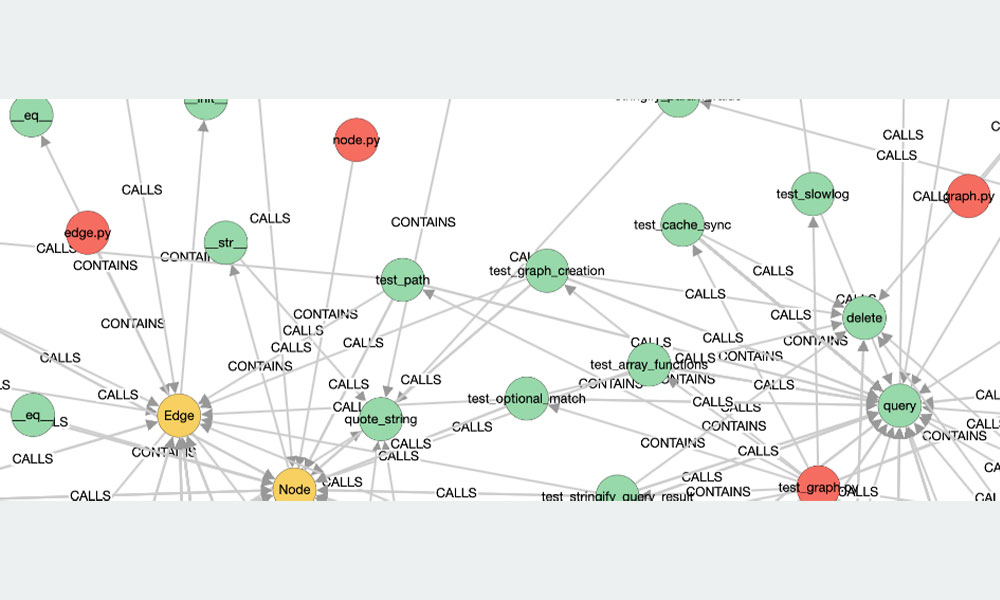
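Once the build finishes, a quick sanity check is to count the relationships that ended up in the graph. The sketch below rests on a few assumptions: the graph is named `falkor` (the name that appears in the MONITOR output in this post), the client exposes `execute_command()` as redis-py's `redis.Redis` does, and a non-compact `GRAPH.QUERY` reply is laid out as `[header, rows, statistics]`. The function name `count_relationships` is my own.

```python
def count_relationships(client, graph_name="falkor"):
    """Return the number of relationships stored in the given graph.

    `client` is any object exposing execute_command(), e.g. redis.Redis.
    """
    reply = client.execute_command(
        "GRAPH.QUERY", graph_name, "MATCH ()-[r]->() RETURN count(r)"
    )
    # Assumed reply layout without --compact: [header, rows, statistics].
    rows = reply[1]
    return int(rows[0][0])


# Usage against the local server (requires `pip install redis`):
# import redis
# client = redis.Redis.from_url("redis://localhost:6379", decode_responses=True)
# print(count_relationships(client))
```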

If you would like to see how the knowledge graph is built behind the scenes, run the Redis MONITOR command before building the index and watch the Cypher commands flow in.

> redis-cli monitor

1693040028.458515 [0 172.17.0.1:39848] "GRAPH.QUERY" "falkor" "CYPHER subj=\"we\" obj=\"way to scale startup funding\" \n MERGE (n1:`Entity` {id:$subj})\n MERGE (n2:`Entity` {id:$obj})\n MERGE (n1)-[:`STUMBLED_UPON`]->(n2)\n " "--compact"

1693040028.459960 [0 172.17.0.1:39848] "GRAPH.QUERY" "falkor" "CYPHER subj=\"startups\" obj=\"isolation\" \n MERGE (n1:`Entity` {id:$subj})\n MERGE (n2:`Entity` {id:$obj})\n MERGE (n1)-[:`FACED`]->(n2)\n " "--compact"

1693040029.434506 [0 172.17.0.1:39848] "GRAPH.QUERY" "falkor" "CYPHER subj=\"startups\" obj=\"initial set of customers\" \n MERGE (n1:`Entity` {id:$subj})\n MERGE (n2:`Entity` {id:$obj})\n MERGE (n1)-[:`FACED`]->(n2)\n " "--compact"
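Each logged command upserts one (subject, relation, object) triplet: two `MERGE` clauses for the `Entity` nodes and one for the relationship between them. To make that shape concrete, here is a small sketch that builds the same kind of query from a triplet. It illustrates the pattern seen in the log and is not the actual FalkorDBGraphStore code. The function name and the rule for turning a relation phrase into a relationship type (uppercase, non-alphanumerics collapsed to `_`) are my assumptions.

```python
import re


def triplet_to_merge_query(subj: str, rel: str, obj: str):
    """Build a parameterized MERGE query and its parameters for one triplet."""
    # Relationship types cannot be passed as query parameters, so the type is
    # embedded in the query text; restrict it to letters, digits and "_".
    rel_type = re.sub(r"[^0-9A-Za-z]+", "_", rel.strip()).strip("_").upper()
    if not rel_type:
        raise ValueError("relationship name is empty after normalization")
    query = (
        "MERGE (n1:`Entity` {id:$subj})\n"
        "MERGE (n2:`Entity` {id:$obj})\n"
        f"MERGE (n1)-[:`{rel_type}`]->(n2)"
    )
    # Node ids travel as parameters, which avoids quoting problems in the text.
    return query, {"subj": subj, "obj": obj}


if __name__ == "__main__":
    query, params = triplet_to_merge_query("startups", "faced", "isolation")
    print(query)
    print(params)
```

Because every clause is a `MERGE` rather than a `CREATE`, re-indexing the same documents should not duplicate nodes or relationships.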

6. Querying the Knowledge Graph

Now you can query the knowledge graph in natural language, for example:

query_engine = index.as_query_engine(include_text=False, response_mode="tree_summarize")

response = query_engine.query(

"Tell me more about Interleaf",

)

display(Markdown(f"<b>{response}</b>"))

... Interleaf is a software company that was founded in 1981. It specialized in developing and selling desktop publishing software.

The company's flagship product was called Interleaf, which was a powerful tool for creating and publishing complex documents.

Interleaf's software was widely used in industries such as aerospace, defense, and government, where there was a need for creating technical documentation and manuals.

The company was acquired by BroadVision in 2000.

Once again, if you would like to see how the knowledge graph is queried behind the scenes, run the MONITOR command before issuing the query and watch the Cypher commands flow in.

> redis-cli monitor

1693040357.757774 [0 172.17.0.1:39848] "GRAPH.QUERY" "falkor" "CYPHER subjs=[\"Interleaf\"] \n MATCH (n1:Entity)\n WHERE n1.id IN $subjs\n WITH n1\n MATCH p=(n1)-[e*1..2]->(z)\n RETURN p\n " "--compact"
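The pattern `(n1)-[e*1..2]->(z)` asks for every outgoing path of one or two hops that starts at a matched subject. As a toy illustration of that traversal (an in-memory sketch, not how FalkorDB evaluates the query), the following function walks a list of triplets the same way. The function name and the placeholder triplets in the demo are hypothetical.

```python
from collections import defaultdict


def paths_from(triplets, subjects, min_hops=1, max_hops=2):
    """Return every outgoing path of min_hops..max_hops edges from the subjects.

    Each path is a list of (subject, relation, object) edges.
    """
    outgoing = defaultdict(list)
    for edge in triplets:
        outgoing[edge[0]].append(edge)

    results = []

    def extend(node, path):
        if len(path) >= min_hops:
            results.append(list(path))
        if len(path) == max_hops:
            return
        for edge in outgoing[node]:
            if edge in path:  # a relationship is not reused within one path
                continue
            path.append(edge)
            extend(edge[2], path)
            path.pop()

    for subject in subjects:
        extend(subject, [])
    return results


if __name__ == "__main__":
    # Placeholder triplets for illustration only, not data from the essay.
    toy = [("Interleaf", "REL_A", "x"), ("x", "REL_B", "y"), ("y", "REL_C", "z")]
    for path in paths_from(toy, ["Interleaf"]):
        print(" -> ".join(f"{s} [{r}] {o}" for s, r, o in path))
```

The two-hop limit keeps the context passed to the LLM small: facts about neighbors of neighbors are included, but anything further away is left out.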