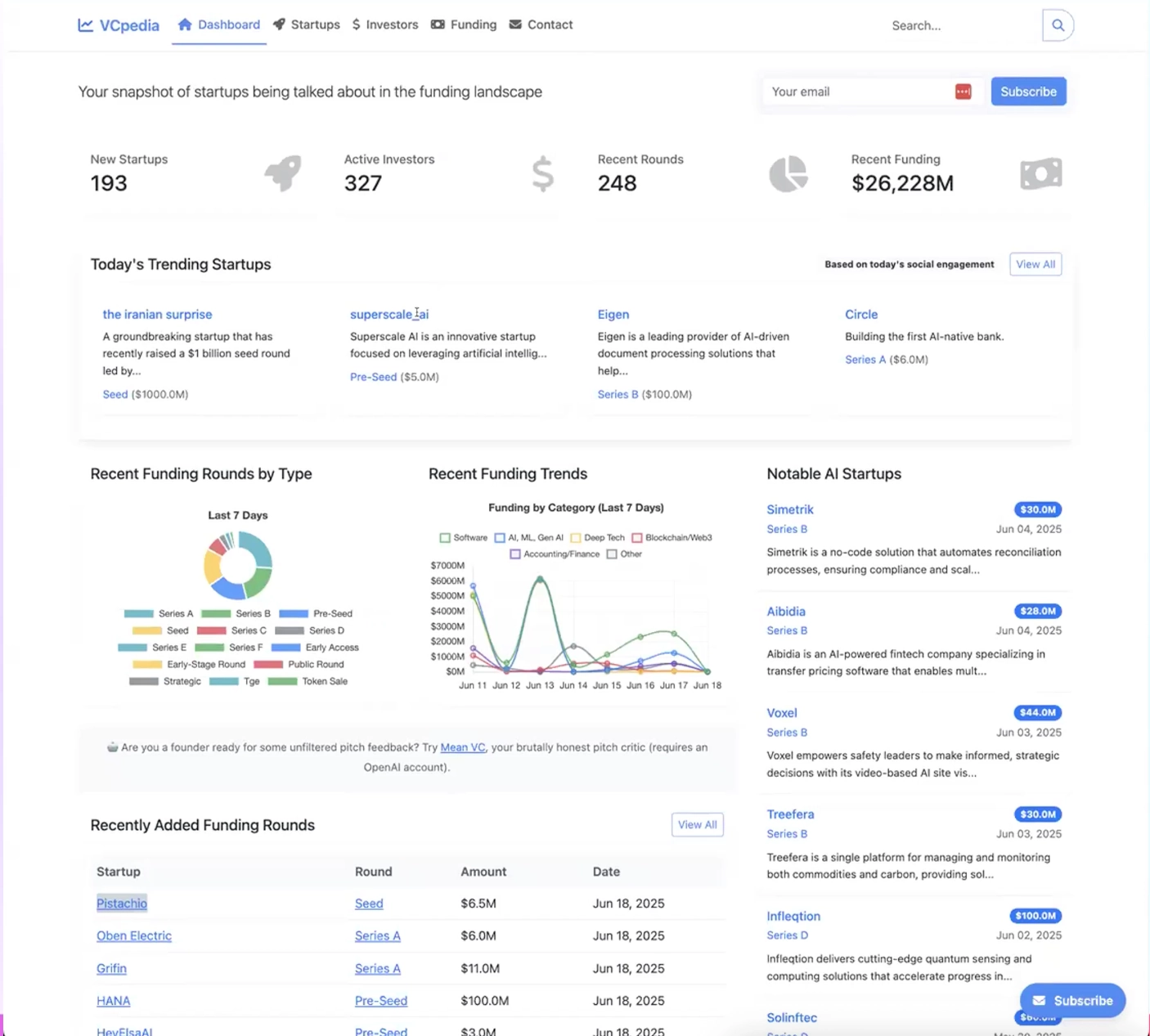

Knowledge graphs represent complex relationships as nodes and edges. This workshop demonstrated practical implementations through two systems: VCPedia for venture capital data extraction and Fractal KG for self-organizing graph structures. The following analysis examines technical decisions, implementation patterns, and production considerations based on firsthand development experience.

Insights

| Insight Category | Technical Details |
|---|---|
| Graph Construction Automation | LLMs enable automated entity extraction and relationship mapping from unstructured data, reducing manual effort in knowledge graph construction |
| Structured Output Methodology | Converting ontology definitions into structured output formats for LLMs ensures consistent data extraction and maintains schema integrity |
| Entity Resolution Strategy | Deduplication represents the primary challenge in large-scale knowledge graphs; approaches include deterministic matching and LLM-based similarity assessment |
| Traversal Efficiency | Graph-based data retrieval through edge traversal provides superior context gathering compared to multi-table relational database joins |
| Ontology-Driven Accuracy | Explicit ontology definition constrains LLM interactions with graphs, improving query accuracy by providing clear entity and relationship type boundaries |
| Memory Optimization | String interning for frequently repeated values (e.g., country names) prevents redundant storage across millions of nodes |
| Schema Flexibility | Property graph model allows schema evolution while maintaining existing data, supporting iterative development approaches |
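The entity-resolution row mentions deterministic matching. The following is a minimal sketch of that approach. It assumes entities are plain name strings and treats two records as the same entity when their normalized names match. The normalization rules (case folding, punctuation stripping, dropping a few corporate suffixes) and the `resolve_entities` helper are illustrative assumptions, not VCPedia's actual pipeline.

```python
import re
from collections import defaultdict

# Hypothetical suffix list; a real pipeline would tune this to its own data.
CORPORATE_SUFFIXES = {"inc", "llc", "ltd", "corp", "co"}


def normalize_name(name: str) -> str:
    """Build a deterministic matching key from a raw entity name."""
    # Lowercase and turn punctuation into spaces.
    cleaned = re.sub(r"[^\w\s]", " ", name.lower())
    tokens = cleaned.split()
    # Drop trailing corporate suffixes such as "Inc." or "Corp.".
    while tokens and tokens[-1] in CORPORATE_SUFFIXES:
        tokens.pop()
    return " ".join(tokens)


def resolve_entities(names: list[str]) -> dict[str, list[str]]:
    """Group raw names that share the same normalized key."""
    groups: dict[str, list[str]] = defaultdict(list)
    for name in names:
        groups[normalize_name(name)].append(name)
    return dict(groups)


if __name__ == "__main__":
    raw = ["Acme, Inc.", "ACME Inc", "acme", "Globex Corp.", "Initech"]
    for key, members in resolve_entities(raw).items():
        print(f"{key!r}: {members}")
```

In this example, the three Acme spellings collapse into one key, while Globex and Initech stay separate. Names that differ in ways normalization cannot catch, such as abbreviations or nicknames, are where an LLM-based similarity check would take over.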

System Architecture: VCPedia Case Study

Q&A

What criteria determine whether information should be modeled as a node versus an attribute?

What granularity of document storage should be implemented in FalkorDB for RAG systems?

What automated ontology enforcement capabilities exist in schemaless knowledge graph systems?

Should multiple domains be consolidated into a single knowledge graph or separated into domain-specific graphs?

How should ontologies scale with data updates?

How can attribute extraction accuracy be improved for strict ontology schemas?

Discussion Transcript

Question 1: Node vs Attribute Design

Question: “What rule or heuristic should one use to determine whether some information should be a node versus an attribute of a node? An individual might be a node in a graph and their name would likely be an attribute, but what about their country? I can see a case for that being either a node or an attribute. Perhaps this is where upfront definition comes into play.”

Roy’s Answer: “It’s a good question, and I think it’s one that many people have yet to ask themselves when they model their data. There isn’t a clear and correct answer: for some, treating a piece of information as an attribute might be the right way to go; for others, it might make sense to treat it as a node. My general way of thinking about this is: if you visualize your data as a graph, whatever comes to mind naturally is the data model you probably want to start experimenting with. Also, if the data point seems trivial, like the person’s name at the beginning of the question, then there’s not much to think about.

Let’s think about the country piece of information. A person might be a resident of a country or hold a certain nationality, so you might represent the country as an attribute on every person in your graph. If you store the country as a plain string on each person, one thing that comes to mind is memory consumption: with millions of persons, the small set of strings representing countries would be duplicated over and over again. We’ve actually addressed this in our latest release with a feature called string interning, where you can specify, ‘Hey, this is a string that comes up pretty often; don’t duplicate it, use a single unique copy,’ and every reference shares it. So this is one thing to take into consideration.

Another thing is the way in which you’re going about traversing or searching for knowledge. You need to think about the different types of questions that your system is intended to answer. For example, if you want to do traversal from a country outward, it would make sense to represent the country as a node. But if you are more focused on a person—you’re trying to locate a person via their unique identifier or social security number and you just want to see, for example, in which state that person lives—then it might make sense to have this as an attribute. You can still go with a node, but then you would have to traverse from that person to a country node and retrieve the name of the country.

To summarize: go with the most natural way of thinking about the data as a graph and see if the data model that you have chosen fits the different types of queries and questions that you’re intending to answer.”
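String interning, as Roy describes it, is a general technique. The following is a minimal Python sketch of the idea, assuming an in-memory pool in which each distinct value is stored once and every node property refers to that single copy. The `StringPool` class is hypothetical and only illustrates the concept. It is not FalkorDB's implementation. Python's built-in `sys.intern` does the same thing for Python strings.

```python
class StringPool:
    """Minimal string-interning pool: one shared copy per distinct value."""

    def __init__(self) -> None:
        self._pool: dict[str, str] = {}

    def intern(self, value: str) -> str:
        # Return the stored copy if this value was seen before; otherwise store it.
        return self._pool.setdefault(value, value)

    def __len__(self) -> int:
        return len(self._pool)


pool = StringPool()
people = []
for i in range(1_000):
    # Build the string at runtime so each iteration creates a fresh str object.
    country = "".join(["Fr", "ance"]) if i % 2 else "".join(["Ger", "many"])
    people.append({"id": i, "country": pool.intern(country)})

print(len(pool))  # 2 distinct strings stored for 1,000 people
print(people[1]["country"] is people[3]["country"])  # True: the same shared object
```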

Yohi’s Answer: “I would say the same thing, especially the last point you made. I’m not as deep on how to optimize the queries, but I think starting from the queries is important. If you’re going to constantly filter your data by country, I would guess it would be intuitive to turn that into a node. But if it’s just something you want to see as a side note when you visit a person’s page, then you might not need to add it as a node. That’s how I would think about it.”
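To make the trade-off concrete, the sketch below uses toy data with plain Python dicts standing in for the graph. The names `lives_in`, `residents`, and the query helpers are hypothetical. Ignoring any property index the database might offer, person-centric lookups are direct in both models. The country-centric query, however, is a scan in the attribute model and a traversal from a single node in the node model.

```python
# Model A: the country is an attribute stored on each person node.
persons_attr = {
    "p1": {"name": "Ana", "country": "Spain"},
    "p2": {"name": "Ben", "country": "Kenya"},
    "p3": {"name": "Cai", "country": "Spain"},
}

# Model B: the country is its own node; people link to it via LIVES_IN edges.
lives_in = {"p1": "Spain", "p2": "Kenya", "p3": "Spain"}  # person -> country node
residents: dict[str, list[str]] = {}  # country node -> its incoming LIVES_IN edges
for person_id, country in lives_in.items():
    residents.setdefault(country, []).append(person_id)


def country_of_attr(person_id: str) -> str:
    # Person-centric question, model A: a single property read.
    return persons_attr[person_id]["country"]


def country_of_node(person_id: str) -> str:
    # Person-centric question, model B: one hop from person to country node.
    return lives_in[person_id]


def people_in_attr(country: str) -> list[str]:
    # Country-centric question, model A: scan every person and filter.
    return [pid for pid, p in persons_attr.items() if p["country"] == country]


def people_in_node(country: str) -> list[str]:
    # Country-centric question, model B: start at the country node, follow its edges.
    return list(residents.get(country, []))


if __name__ == "__main__":
    print(country_of_attr("p1"), country_of_node("p1"))
    print(people_in_attr("Spain"), people_in_node("Spain"))
```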

Question 2: Document Storage Detail

Question: “When working with documents, what level of detail are we saving in FalkorDB? Would you store sentences, paragraphs, or summaries? If only storing summaries, are you also storing entire documents in a separate database?”

Roy’s Answer: “This is a classic RAG question, I would say, and once again there’s no single right answer. All options are viable, and it really depends on the type of questions and the level of accuracy you are aiming for. We’ve seen every variation: some people analyze the documents, for example with LLMs, creating embeddings, extracting sentences, and generating summaries. The different components (paragraphs, sentences, summaries, entire documents) can all be represented as nodes in a graph, with the relationships between them as edges.

For example, in the classic RAG approach, you create an embedding for the question and then run a semantic search to reach the relevant summary or paragraphs. From there, you can use the graph to get hold of the original document. Maybe there’s a hierarchy of documents saying, ‘Oh, this document is a page from a broader corpus,’ or ‘Oh, this is a Wikipedia article that is linked to other Wikipedia pages. Maybe I want to extend my context and get some extra data by traversing the graph.’

So there’s no clear answer, and I think you should experiment with it. But the benefit of storing this information in a graph is that you can retrieve additional context, which usually helps your LLM generate the correct answer.”
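The following is a minimal sketch of the retrieval step Roy describes. It assumes semantic search has already returned a matching paragraph, and that the graph uses hypothetical `PART_OF` (paragraph to document) and `LINKS_TO` (document to document) relationships, modeled here as plain dicts. The traversal adds the source document, its sibling paragraphs, and documents linked within a given number of hops.

```python
# Hypothetical toy graph standing in for nodes and edges in the database.
part_of = {"para:1": "doc:graphs", "para:2": "doc:graphs", "para:3": "doc:rag"}
links_to = {"doc:graphs": ["doc:rag"], "doc:rag": []}
text = {
    "para:1": "A graph is a set of nodes and edges.",
    "para:2": "Edges can carry properties.",
    "para:3": "RAG retrieves context before generation.",
    "doc:graphs": "Article: Graphs",
    "doc:rag": "Article: Retrieval-augmented generation",
}


def expand_context(hit: str, hops: int = 1) -> list[str]:
    """Start from a chunk found by semantic search and gather surrounding context."""
    doc = part_of[hit]
    context = [hit, doc]  # the matched chunk and the document it came from
    # Sibling chunks of the same document.
    context += [p for p, d in part_of.items() if d == doc and p != hit]
    # Linked documents up to `hops` steps away, visited breadth-first.
    frontier, seen = [doc], {doc}
    for _ in range(hops):
        next_frontier = []
        for d in frontier:
            for linked in links_to.get(d, []):
                if linked not in seen:
                    seen.add(linked)
                    next_frontier.append(linked)
        context += next_frontier
        frontier = next_frontier
    return context


if __name__ == "__main__":
    for node_id in expand_context("para:1"):
        print(node_id, "|", text[node_id])
```

The expanded node list is what would be passed to the LLM as extra context. The `hops` parameter bounds how far the graph walk is allowed to reach.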

Question 3: Ontology Enforcement

Question: “If I understand correctly from the earlier slides, despite the knowledge graph system being schemaless, developing an ontology is a useful exercise to help guide the development and maintenance of the model that the system develops. Are there any useful mechanisms for enforcing that ontology within the knowledge graph system automatically?”

Roy’s Answer: “There are some mechanisms, yes, but the overall model we’re following is known as the property graph model, which at its core is schemaless. The way you can start enforcing a schema in FalkorDB is with constraints. Currently, we have two types: unique constraints and exists constraints.

The unique constraint makes sure that an attribute is unique throughout an entire entity type. For example, a social security number should be unique for every person. In addition, the exists constraint makes sure that every entity of a certain type—let’s say a country—must have a population attribute. It doesn’t have to be unique, but it must exist. That’s what we currently offer.

Constraints would not, for example, prevent you from introducing new labels or relationship types that are not part of the ontology. Nor would they restrict the types of edges coming into or going out of a certain node type. So if your ontology says there should not be an edge of type ‘driven to,’ that would not be enforced.

I think that over time, the plan is to introduce schema enforcement mechanisms and features into FalkorDB. At the moment, though, the responsibility lies with the user to make sure the underlying graph follows the ontology. That could be done either via code written by the user or by guiding an LLM to strictly follow the ontology whenever ingesting, updating, or deleting data.”
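Because constraints cover only uniqueness and existence, the remaining checks fall to application code. The following is a minimal sketch of such a guard. It assumes an ontology expressed as allowed labels with required and unique attributes, plus allowed (source label, relationship type, target label) triples. The `Ontology`, `Graph`, `add_node`, and `add_edge` names are hypothetical, and the in-memory `Graph` stands in for writes you would otherwise send to the database.

```python
from dataclasses import dataclass, field


@dataclass
class Ontology:
    required_attrs: dict[str, set[str]]  # allowed labels -> attributes that must exist
    unique_attrs: dict[str, set[str]]  # label -> attributes that must be unique
    edge_types: set[tuple[str, str, str]]  # allowed (source label, rel type, target label)


@dataclass
class Graph:
    nodes: dict[str, tuple[str, dict]] = field(default_factory=dict)  # id -> (label, attrs)
    edges: list[tuple[str, str, str]] = field(default_factory=list)  # (src id, rel type, dst id)


def add_node(g: Graph, onto: Ontology, node_id: str, label: str, attrs: dict) -> None:
    """Insert a node only if its label and attributes satisfy the ontology."""
    if label not in onto.required_attrs:
        raise ValueError(f"label {label!r} is not in the ontology")
    missing = onto.required_attrs[label] - set(attrs)
    if missing:
        raise ValueError(f"{label} is missing required attributes {sorted(missing)}")
    for attr in onto.unique_attrs.get(label, set()):
        if attr in attrs and any(
            other_label == label and other.get(attr) == attrs[attr]
            for other_label, other in g.nodes.values()
        ):
            raise ValueError(f"duplicate {label}.{attr} = {attrs[attr]!r}")
    g.nodes[node_id] = (label, attrs)


def add_edge(g: Graph, onto: Ontology, src: str, rel_type: str, dst: str) -> None:
    """Insert an edge only if its (source label, type, target label) is allowed."""
    triple = (g.nodes[src][0], rel_type, g.nodes[dst][0])
    if triple not in onto.edge_types:
        raise ValueError(f"edge {triple} is not allowed by the ontology")
    g.edges.append((src, rel_type, dst))


if __name__ == "__main__":
    onto = Ontology(
        required_attrs={"Person": {"ssn"}, "Country": {"population"}},
        unique_attrs={"Person": {"ssn"}},
        edge_types={("Person", "LIVES_IN", "Country")},
    )
    g = Graph()
    add_node(g, onto, "p1", "Person", {"ssn": "123-45-6789", "name": "Ana"})
    add_node(g, onto, "c1", "Country", {"name": "Spain", "population": 48_000_000})
    add_edge(g, onto, "p1", "LIVES_IN", "c1")
    try:
        add_edge(g, onto, "p1", "DRIVEN_TO", "c1")  # not in the ontology
    except ValueError as err:
        print("rejected:", err)
```

The uniqueness and required-attribute checks mirror what the database constraints already provide. The label and edge-type checks cover the gaps Roy lists.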

Question 4: Graph Embedding Models

Question: “What graph embedding models are supported, and do they specify node, edge, or graph embeddings?”

Roy’s Answer: “That’s easy: none. We do not have GNN capabilities or graph embeddings. The only type of embeddings we support at the moment are vector embeddings, the ones you can generate with different LLMs or AI vendors. Once you have those vector embeddings, you can use our semantic index to do vector searches. I know there’s interest in GNNs for node label prediction, edge prediction, and subgraph classification, but unfortunately that’s not supported at the moment.”
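Since only vector embeddings are supported, the search side reduces to a nearest-neighbor lookup over vectors produced by an external model. The following brute-force sketch shows what a vector search computes. It assumes embeddings are already attached to nodes; the three-dimensional toy vectors and the `top_k` helper are hypothetical. A database vector index answers the same kind of query without scoring every node, and its similarity function and index structure are configuration details not covered here.

```python
import math

# Hypothetical toy embeddings, as if produced by an external embedding model.
embeddings = {
    "n1": [1.0, 0.0, 0.0],
    "n2": [0.9, 0.1, 0.0],
    "n3": [0.0, 1.0, 0.0],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], vectors: dict[str, list[float]], k: int = 2) -> list[str]:
    """Score every vector against the query and return the k most similar node ids."""
    scored = sorted(((cosine(query, v), node_id) for node_id, v in vectors.items()), reverse=True)
    return [node_id for _, node_id in scored[:k]]


if __name__ == "__main__":
    print(top_k([1.0, 0.0, 0.0], embeddings))
```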

Question 5: Domain Separation

Question: “Should I use a knowledge graph per domain or a single knowledge graph containing all the domain data?”

Roy’s Answer: “I think it really depends on the use case, but let me say this: if you do see value in storing all of your information, which might span multiple domains, in a single graph, you can do that. The nice thing about this is that you can have multiple ontologies, each describing a different portion of your graph. For example, one ontology might define a domain around driving sessions, but your graph extends further. It doesn’t contain only driving sessions with roads and intersections; it might also contain related information such as cities, population, universities, what have you. You can still maintain two different ontologies, one focusing on the driving sessions and the other on, let’s say, population and employment, and both coexist within a single graph.

It is the LLM, together with the ontology presented to it, that knows how to filter or focus on the relevant pieces of information within this larger graph. The other option, if you don’t see value in connecting everything together, is another very cool feature of FalkorDB: you can maintain multiple graphs in a single database. Similar to SQL, where you have multiple tables within a single database, here you can have multiple disjoint graphs all within a single database. So you can keep them separated or join them together, and you can have one ontology per graph or multiple ontologies capturing subgraphs within a single one.”
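The following is a minimal sketch of several ontologies describing portions of one graph. It assumes each ontology can be reduced to a set of node labels and relationship types; the labels, relationship types, and helpers below are hypothetical. Each ontology yields its own view of the graph. Combining them also exposes the cross-domain `LOCATED_IN` edge from a road to a city, which is the kind of connection that argues for a single graph.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ontology:
    name: str
    labels: frozenset[str]
    relationships: frozenset[str]


# One shared graph spanning two domains (toy data): node id -> label.
nodes = {
    "s1": "DrivingSession", "r1": "Road", "i1": "Intersection",
    "c1": "City", "u1": "University",
}
edges = [
    ("s1", "ON_ROAD", "r1"),
    ("r1", "MEETS_AT", "i1"),
    ("r1", "LOCATED_IN", "c1"),  # crosses from the driving domain to the city domain
    ("u1", "LOCATED_IN", "c1"),
]

driving = Ontology("driving", frozenset({"DrivingSession", "Road", "Intersection"}),
                   frozenset({"ON_ROAD", "MEETS_AT"}))
population = Ontology("population", frozenset({"City", "University"}),
                      frozenset({"LOCATED_IN"}))


def scoped_view(onto: Ontology) -> tuple[set[str], list[tuple[str, str, str]]]:
    """Return the nodes and edges of the shared graph that one ontology describes."""
    kept = {nid for nid, label in nodes.items() if label in onto.labels}
    kept_edges = [e for e in edges
                  if e[1] in onto.relationships and e[0] in kept and e[2] in kept]
    return kept, kept_edges


def combine(a: Ontology, b: Ontology) -> Ontology:
    """Merge two ontologies so a query can span both domains."""
    return Ontology(f"{a.name}+{b.name}", a.labels | b.labels,
                    a.relationships | b.relationships)


if __name__ == "__main__":
    for onto in (driving, population, combine(driving, population)):
        print(onto.name, scoped_view(onto)[1])
```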

Question 6: Multiple Languages

Question: “If VCPedia supported multiple languages, would you add a translation step or store the tweets in their original language?”

Yohi’s Answer: “I would probably store the tweets in the original language and simply translate the output of the site, but that’s just my gut reaction. I don’t really have a strong reason for it.”

Question 7: Ontology Scaling

Question: “How does an ontology scale when my data updates?”

Roy’s Answer: “It really depends on the update. For this particular question, you can think about two different types of updates. The first type doesn’t change the ontology: you’re just adding a new city, a new university, or a new person to your graph, and the ontology remains the same. The second type adds a new kind of entity. For example, maybe your driving sessions only had cars in them, but now there is a truck.

We do not enforce alignment between the underlying graph and the ontology; this is something the user needs to maintain, just as we don’t enforce a schema. Whenever you introduce a new type of entity, you should extend your ontology to capture it. So in the truck example, you should add a new entity to your ontology describing what a truck looks like: the attributes associated with it and the types of relationships that interact with this new truck entity.”
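The two kinds of update can be told apart mechanically. The following is a minimal sketch, assuming the ontology is kept as a plain dict that maps each entity label to its attributes and relationship types. The format and the `classify_update`, `drift`, and `extend_ontology` helpers are hypothetical. A label already in the ontology is an instance-level update, while a new label such as `Truck` signals that the ontology must be extended.

```python
# Hypothetical ontology format: entity label -> its attributes and relationship types.
ontology = {
    "Car": {"attributes": {"plate", "model"}, "relationships": {"PART_OF_SESSION"}},
    "Road": {"attributes": {"name"}, "relationships": {"CONNECTS"}},
}


def classify_update(onto: dict, label: str) -> str:
    """'instance' if the label is already modeled, 'schema' if it is a new entity type."""
    return "instance" if label in onto else "schema"


def drift(onto: dict, graph_labels: set[str]) -> set[str]:
    """Labels present in the data that the ontology does not describe yet."""
    return set(graph_labels) - set(onto)


def extend_ontology(onto: dict, label: str, attributes: set[str], relationships: set[str]) -> dict:
    """Return a copy of the ontology with one new entity type added."""
    if label in onto:
        raise ValueError(f"{label!r} is already in the ontology")
    updated = dict(onto)
    updated[label] = {"attributes": set(attributes), "relationships": set(relationships)}
    return updated


if __name__ == "__main__":
    print(classify_update(ontology, "Car"))  # existing type: the ontology is unchanged
    print(classify_update(ontology, "Truck"))  # new type: the ontology must grow
    labels_in_graph = {"Car", "Road", "Truck"}
    print(drift(ontology, labels_in_graph))
    ontology_v2 = extend_ontology(ontology, "Truck", {"plate", "max_load"}, {"PART_OF_SESSION"})
    print(drift(ontology_v2, labels_in_graph))
```

Returning a new dict rather than mutating the old one keeps earlier ontology versions available, which can help when auditing how the schema evolved.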

Question 8: Attribute Extraction Improvement

Question: “How can I improve the attribute extraction for each entity from my strict ontology schema for my knowledge graph? For example, I would get non-relevant context assigned to an entity or incomplete extraction. I hypothesize my chunking is wrong or I need to employ more natural language processing steps.”

Yohi’s Answer: “It’s hard to say. With graphs, I feel it’s hard to solve a problem without knowing the specifics, because it really is case-by-case depending on the data you’re working with. But a few things come to mind that have worked for me in different cases:

Few-shot prompting is always helpful: give a couple of examples. If you’re converting large documents and need to chunk them, sometimes each chunk needs higher-level context from the document. A simple example: a book starts by using people’s names, but later on it uses ‘he’ or ‘she’ instead. If you grab one of those later paragraphs and try to convert it, the ‘he’ might not be matched to the right name. So if you’re chunking and then extracting, you might need to feed higher-level context into each chunk.

I’ve also found structured output helpful. When you define the JSON schema, you can add a clear description for each parameter and hard-coded rules on the allowed options, and improving the prompt there helps as well. So those are just some techniques that popped into my mind.”
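The following is a minimal sketch combining these techniques. It assumes the extraction model accepts a JSON Schema for structured output. The `PERSON` ontology entry, its field names, and the helpers are hypothetical, and the LLM call itself is omitted because it depends on the provider. Every field carries a description, fixed options become an `enum`, and each chunk is sent with a document-level summary so that a pronoun like "she" can be tied back to the right name.

```python
import json

# Hypothetical ontology entry: field -> (JSON type or list of allowed values, description).
PERSON = {
    "name": ("string", "Full name as first introduced in the document, never a pronoun."),
    "role": ("string", "Job title or role mentioned in the text."),
    "relationship_to_company": (["founder", "investor", "employee"],
                                "Pick exactly one of the allowed values."),
}


def to_json_schema(entity: dict) -> dict:
    """Turn an ontology entry into a JSON Schema with a description for every field."""
    properties = {}
    for field_name, (type_or_enum, description) in entity.items():
        if isinstance(type_or_enum, list):
            # Hard-coded options become an enum the model must choose from.
            properties[field_name] = {"type": "string", "enum": type_or_enum,
                                      "description": description}
        else:
            properties[field_name] = {"type": type_or_enum, "description": description}
    return {
        "type": "object",
        "properties": properties,
        "required": list(entity),
        "additionalProperties": False,
    }


def build_prompt(doc_summary: str, chunk: str, examples: list[tuple[str, dict]]) -> str:
    """Prepend document-level context and few-shot examples to a chunk."""
    parts = [f"Document context: {doc_summary}"]
    for text, output in examples:
        parts.append(f"Example input: {text}\nExample output: {json.dumps(output)}")
    parts.append(f"Input: {chunk}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(json.dumps(to_json_schema(PERSON), indent=2))
    example = ("Jane Doe founded Acme in 2015 and serves as its CEO.",
               {"name": "Jane Doe", "role": "CEO", "relationship_to_company": "founder"})
    print(build_prompt("Biography of Jane Doe, founder of Acme.",
                       "She later raised a seed round.", [example]))
```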