When you surf through Amazon Prime, you are met with a screen that lists the top movies for you. Netflix and Hulu do the same. These platforms use powerful recommendation systems to keep you interested — by tracking your browsing history, your recently watched shows, the movies you have rated, the languages in which you watch films, and more.

Movie recommendation systems are a fascinating blend of technology, psychology, and art, and they are now an integral part of our movie-watching experience. When generating recommendations, they take a number of parameters into consideration, such as genre, language, country of origin, actors, and rating.

In this blog, we are going to design a proof-of-concept movie recommendation system using the OpenAI APIs, with FalkorDB as the Knowledge Graph.

Knowledge Graph: A Brief Introduction

A Knowledge Graph is a versatile way to store large amounts of information in a structured manner. Any large document corpus can be converted into a Knowledge Graph in the following manner.

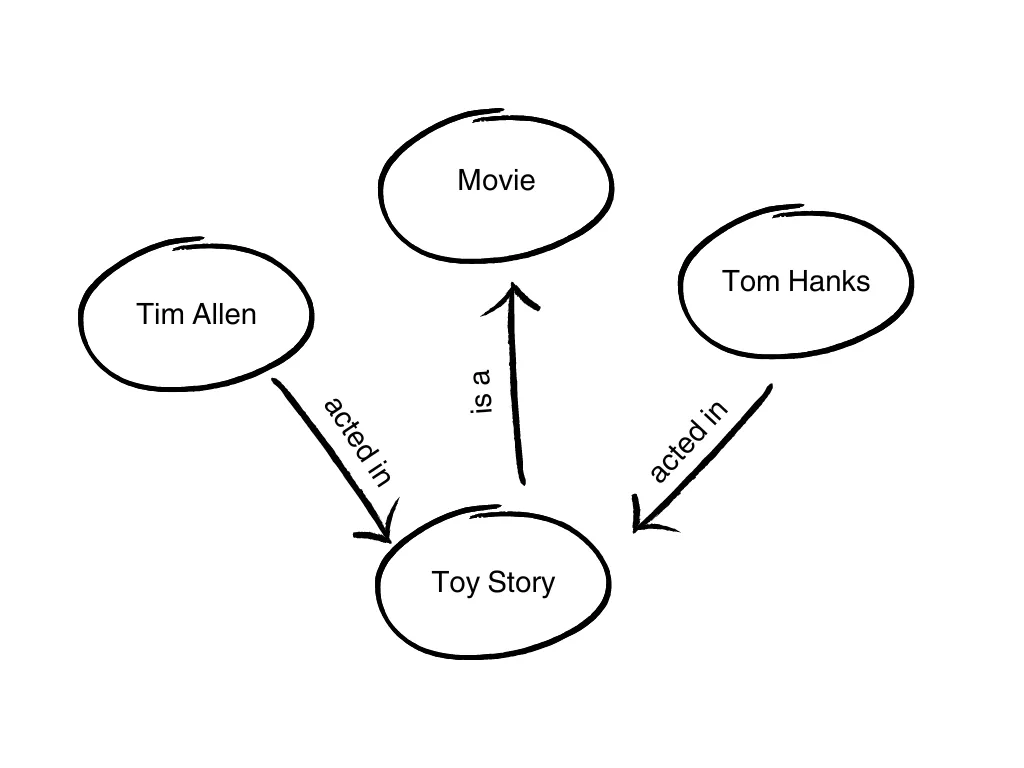

Imagine a board where you want to stick notes about different ideas. Each note has an identity — like the name of a person, a place, or a thing. The notes are also interconnected with each other through strings to show how they are related to each other. For example, a note about the actor ‘Tom Hanks’ is connected to the movie ‘Toy Story’ with the relationship ‘acted in’.

A Knowledge Graph is like this board with notes and strings. It’s a way to store information so that a system can understand and use it. In a Knowledge Graph, the notes are technically referred to as ‘nodes’ while the strings are called ‘edges’.

This way, when you ask the system a question like ‘Who are the actors in Toy Story?’, it can look at the Knowledge Graph, follow the connections, and tell you the answer.
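To make the board-and-strings picture concrete, here is a minimal sketch in plain Python. It is not how FalkorDB stores data internally; the triples and the helper name `actors_in` are made up for illustration.

```python
# A toy knowledge graph stored as (subject, relation, object) triples.
# The entries are illustrative; the last one is there only to show filtering.
EDGES = [
    ("Tom Hanks", "ACTED_IN", "Toy Story"),
    ("Tim Allen", "ACTED_IN", "Toy Story"),
    ("Tom Hanks", "ACTED_IN", "Forrest Gump"),
]


def actors_in(movie, edges=EDGES):
    """Return every node connected to `movie` by an ACTED_IN edge."""
    return [subj for subj, rel, obj in edges if rel == "ACTED_IN" and obj == movie]


print(actors_in("Toy Story"))  # ['Tom Hanks', 'Tim Allen']
```

Answering the question is just a matter of following the edges that point at the ‘Toy Story’ node.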

In contrast to vector databases, which are another popular way of storing information and performing similarity searches, Knowledge Graphs provide a more structured approach to storing knowledge. Vector databases recommend items based solely on semantic similarity between pieces of data, so their responses tend to be broad and generic. A Knowledge Graph, on the other hand, can follow complicated relationships between entities and provide a more precise response.

For example, suppose our query is ‘Suggest films directed by James Cameron, in which Leonardo DiCaprio starred, and which grossed over 500 million dollars’. A vector DB would perform a similarity search on this query and return a mix of films directed by James Cameron, films in which Leonardo DiCaprio starred, and films that grossed over 500 million dollars, because each of these is semantically similar to the query. A Knowledge Graph, leveraging its interconnectedness, would find the exact film that satisfies all three conditions: ‘Titanic’.
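The difference comes down to requiring all conditions at once rather than ranking by similarity. Here is a rough sketch of that conjunctive lookup over a few hand-written rows. The gross figures are approximate, illustrative values, and the Cypher string assumes a hypothetical `gross` property that the graph built later in this article does not store.

```python
# Hand-written sample rows; gross figures are rough, illustrative values in USD.
FILMS = [
    {"title": "Titanic", "director": "James Cameron",
     "stars": {"Leonardo DiCaprio", "Kate Winslet"}, "gross": 2_200_000_000},
    {"title": "Avatar", "director": "James Cameron",
     "stars": {"Sam Worthington", "Zoe Saldana"}, "gross": 2_900_000_000},
    {"title": "Inception", "director": "Christopher Nolan",
     "stars": {"Leonardo DiCaprio", "Joseph Gordon-Levitt"}, "gross": 830_000_000},
]


def find_films(films, director, star, min_gross):
    """Keep only films that satisfy all three conditions at the same time."""
    return [
        f["title"]
        for f in films
        if f["director"] == director and star in f["stars"] and f["gross"] > min_gross
    ]


# Roughly the same question as a Cypher pattern (not executed here;
# `m.gross` is a hypothetical property used only for this illustration).
EQUIVALENT_CYPHER = (
    "MATCH (d:Director {name: 'James Cameron'})-[:DIRECTED]->(m:Movie)"
    "<-[:STARRED_IN]-(s:Star {name: 'Leonardo DiCaprio'}) "
    "WHERE m.gross > 500000000 RETURN m.title"
)

print(find_films(FILMS, "James Cameron", "Leonardo DiCaprio", 500_000_000))  # ['Titanic']
```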

Building a Movie Recommendation System with FalkorDB

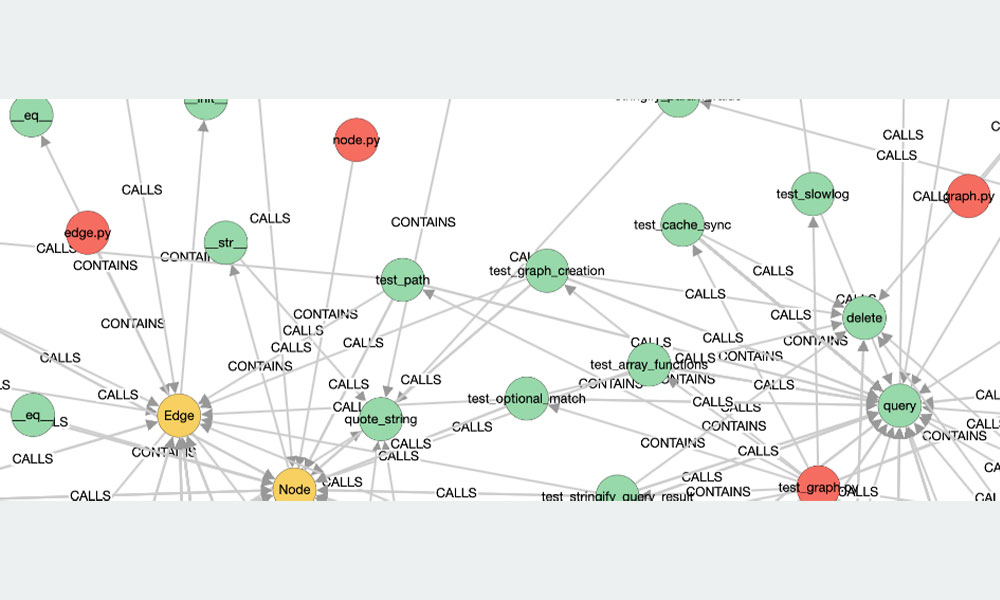

FalkorDB is a graph database that has been developed to provide a fast, scalable, and reliable solution for managing graph data. It is known for its low latency and high performance, making it a suitable choice for applications where these attributes are critical. FalkorDB is a successor to RedisGraph and aims to continue and expand on the capabilities that RedisGraph provided. It’s designed to handle a wide range of graph-related tasks and is adaptable to various use cases, including those that require handling complex graph structures.

In this article, we will use FalkorDB to create a simple demo of a movie recommendation system. This application will use IMDB’s top 1000 movies dataset. Additionally, we will generate synthetic data of user preferences, and try to see if we can infer what a user would like based on movie preferences of other users with similar demographics. Also, we will use OpenAI as our LLM.

Setting Up the Environment and Code

Create a virtual environment

python3.10 -m venv .venv

source .venv/bin/activate

Start the FalkorDB server

docker run -p 6379:6379 -it --rm falkordb/falkordb

The above command will run an instance of FalkorDB using the latest docker image, on localhost:6379.

Dataset: We’ll be using the IMDB Movies Dataset, which is a dataset of top 1000 movies and TV shows. The content of the dataset is as follows:

- Poster_Link — Link of the poster that IMDB is using.

- Series_Title — Name of the movie.

- Released_Year — Year in which that movie was released.

- Certificate — Certificate earned by the movie.

- Runtime — Total runtime of the movie.

- Genre — Genre of the movie.

- IMDB_Rating — Rating of the movie on the IMDB site.

- Overview — Mini story/ summary.

- Meta_score — Score earned by the movie.

- Director — Name of the director.

- Star1, Star2, Star3, Star4 — Names of the stars.

- No_of_votes — Total number of votes.

- Gross — Money earned by the movie.
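Several of these columns arrive as text rather than numbers. The sample output later in this article shows values such as '101 min' for Runtime and 'Action, Crime, Drama' for Genre. The article stores them as-is, but if you want numeric comparisons or per-genre filtering, a small parsing step like the sketch below could help. The helper names `parse_runtime` and `split_genres` are our own, not part of any library.

```python
def parse_runtime(value):
    """Convert a Runtime string such as '101 min' to an int, or None if malformed."""
    parts = str(value).split()
    if parts and parts[0].isdigit():
        return int(parts[0])
    return None


def split_genres(value):
    """Split a Genre string such as 'Action, Crime, Drama' into a list of genres."""
    return [g.strip() for g in str(value).split(",") if g.strip()]


print(parse_runtime("101 min"))              # 101
print(split_genres("Action, Crime, Drama"))  # ['Action', 'Crime', 'Drama']
```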

Install the following dependencies first.

%pip install langchain langchain-community langchain-openai falkordb langchain-experimental pandas gradio

Set up your OpenAI API key:

import os

import sys

import logging

os.environ["OPENAI_API_KEY"] = "YOUR_API_KEY"

logging.basicConfig(stream=sys.stdout, level=logging.INFO)

Now we will set up a FalkorDB graph object in LangChain.

import os
import pandas as pd
from langchain_community.graphs import FalkorDBGraph

# Connect to the graph named "imdb" on the local FalkorDB instance
graph = FalkorDBGraph(database="imdb")

# Load the dataset from the current working directory
filename = os.path.join(os.getcwd(), 'imdb_top_1000.csv')
data = pd.read_csv(filename)

Then we’ll create a Cypher statement to insert data into FalkorDB.

A Cypher statement is a query or command written in Cypher, which is a declarative graph query language used by graph databases. Cypher is designed to allow users to efficiently and intuitively query, update, and administer the graph data. A Cypher statement typically specifies patterns of nodes and relationships in the graph database to create, modify, or retrieve data.

For example, a Cypher query to find a person named “John” in the database might look like `MATCH (person:Person {name: "John"}) RETURN person`.
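If the pattern syntax is new to you, it may help to see what such a MATCH does conceptually. The sketch below mimics it over a plain Python list. The node data and the `match` helper are invented for illustration and have nothing to do with how a graph database actually executes queries.

```python
# Each node has a label plus arbitrary properties (illustrative data only).
NODES = [
    {"label": "Person", "name": "John", "age": 41},
    {"label": "Person", "name": "Maria", "age": 35},
    {"label": "Movie", "name": "John"},  # same name, different label
]


def match(nodes, label, **props):
    """Mimic MATCH (n:<label> {<props>}) RETURN n on a list of dict nodes."""
    return [
        n for n in nodes
        if n["label"] == label and all(n.get(k) == v for k, v in props.items())
    ]


print(match(NODES, "Person", name="John"))
# [{'label': 'Person', 'name': 'John', 'age': 41}]
```

Both the label and the property have to match, which is why the Movie node named “John” is not returned.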

Let’s write a Cypher statement that creates the Movie and Director nodes and connects them with a DIRECTED edge.

We will run this Cypher query against our LangChain graph object.

In the code below we are creating a movie node, then a director node, and then establishing the relationship between these two nodes.

Finally we insert into the graph db using MERGE statements. We do this individually for all the movies in the dataset.

import os
import pandas as pd

# Escape single quotes so values can sit inside Cypher string literals;
# missing values become the placeholder 'NA'
def clean_column(column_data):
    return column_data.apply(lambda x: x.replace("'", "\\'") if pd.notnull(x) else 'NA')

# Assume 'data' is already defined and loaded with pd.read_csv()
# Apply the cleanup function to all necessary columns
data['Series_Title'] = clean_column(data['Series_Title'])
data['Director'] = clean_column(data['Director'])
data['Certificate'] = clean_column(data['Certificate'])
data['Runtime'] = clean_column(data['Runtime'])
data['Genre'] = clean_column(data['Genre'])
# Note: the rating is written unquoted into the query below,
# so a missing rating ('NA') would produce invalid Cypher
data['IMDB_Rating'] = data['IMDB_Rating'].fillna('NA')
data['Released_Year'] = pd.to_numeric(data['Released_Year'], errors='coerce').fillna(-1)

# Clean the star columns
star_columns = ['Star1', 'Star2', 'Star3', 'Star4']
for column in star_columns:
    data[column] = clean_column(data[column])

# Now, iterate through the DataFrame and construct the graph
for index, row in data.iterrows():
    # Directly use the cleaned data
    movie_title = row['Series_Title']
    director_name = row['Director']
    certificate = row['Certificate']
    runtime = row['Runtime']
    genre = row['Genre']
    imdb_rating = row['IMDB_Rating']
    released_year = int(row['Released_Year']) if row['Released_Year'] != -1 else 'NA'

    # Skip the row if the year is invalid
    if released_year == 'NA':
        print(f"Skipping row {index} due to invalid year.")
        continue

    # Create or merge the Movie node
    movie_node = (
        f"MERGE (m:Movie {{"
        f"title: '{movie_title}', "
        f"year: {released_year}, "
        f"certificate: '{certificate}', "
        f"runtime: '{runtime}', "
        f"genre: '{genre}', "
        f"imdb_rating: {imdb_rating}"
        f"}})"
    )

    # Create or merge the Director node and its DIRECTED edge
    director_node = f"MERGE (d:Director {{name: '{director_name}'}})"
    directed_by_relation = "MERGE (d)-[:DIRECTED]->(m)"

    # Create or merge the Star nodes and their relationships
    star_nodes = ""
    starred_in_relations = ""
    for s, star in enumerate(star_columns, start=1):
        star_name = row[star]
        if star_name != 'NA':
            star_nodes += f"MERGE (s{s}:Star {{name: '{star_name}'}})\n"
            starred_in_relations += f"MERGE (s{s})-[:STARRED_IN]->(m)\n"

    # Combine everything into one query per movie
    cypher_query = (
        f"{movie_node} {director_node} {directed_by_relation}"
        f" {star_nodes.strip()}\n{starred_in_relations.strip()}"
    )

    # Print the Cypher query, then run it
    print(cypher_query)
    graph.query(cypher_query)

# Refresh the schema once, after all insertions, rather than on every iteration
graph.refresh_schema()
print("Added graph documents to FalkorDB")

You will see the following output.

MERGE (m:Movie {title: 'Mou gaan dou', year: 2002, certificate: 'UA', runtime: '101 min', genre: 'Action, Crime, Drama', imdb_rating: 8.0}) MERGE (d:Director {name: 'Andrew Lau'}) MERGE (d)-[:DIRECTED]->(m) MERGE (s1:Star {name: 'Alan Mak'})

MERGE (s2:Star {name: 'Andy Lau'})

MERGE (s3:Star {name: 'Tony Chiu-Wai Leung'})

MERGE (s4:Star {name: 'Anthony Chau-Sang Wong'})

MERGE (s1)-[:STARRED_IN]->(m)

MERGE (s2)-[:STARRED_IN]->(m)

MERGE (s3)-[:STARRED_IN]->(m)

MERGE (s4)-[:STARRED_IN]->(m)

MERGE (m:Movie {title: 'Pirates of the Caribbean: The Curse of the Black Pearl', year: 2003, certificate: 'UA', runtime: '143 min', genre: 'Action, Adventure, Fantasy', imdb_rating: 8.0}) MERGE (d:Director {name: 'Gore Verbinski'}) MERGE (d)-[:DIRECTED]->(m) MERGE (s1:Star {name: 'Johnny Depp'})

MERGE (s2:Star {name: 'Geoffrey Rush'})

MERGE (s3:Star {name: 'Orlando Bloom'})

MERGE (s4:Star {name: 'Keira Knightley'}) MERGE (s1)-[:STARRED_IN]->(m)

MERGE (s2)-[:STARRED_IN]->(m)

MERGE (s3)-[:STARRED_IN]->(m)

MERGE (s4)-[:STARRED_IN]->(m)
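A side note on the approach above: because values are interpolated directly into the query text, titles containing apostrophes have to be escaped by hand. A common alternative is to pass values as query parameters. The sketch below only builds the query and the parameter dictionary. The helper name `build_movie_query` is ours, and we are assuming that `graph.query()` accepts a parameter dictionary as its second argument, so check this against your installed version.

```python
def build_movie_query(row):
    """Return (query, params) for one movie and its director.

    Values travel in `params` instead of being pasted into the query text,
    so no manual quote escaping is needed.
    """
    query = (
        "MERGE (m:Movie {title: $title, year: $year}) "
        "MERGE (d:Director {name: $director}) "
        "MERGE (d)-[:DIRECTED]->(m)"
    )
    params = {
        "title": row["Series_Title"],
        "year": int(row["Released_Year"]),
        "director": row["Director"],
    }
    return query, params


# Hand-written sample row with an apostrophe in the title
sample = {"Series_Title": "Ocean's Eleven", "Released_Year": 2001, "Director": "Steven Soderbergh"}
query, params = build_movie_query(sample)
print(query)
print(params)

# Assumed usage (requires a running FalkorDB instance):
# graph.query(query, params)
```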

Next, we will add randomly generated user preference data into the Knowledge Graph.

This is to simulate a real-life scenario where the opinions of users (for example, their likes or dislikes towards a particular genre) will be taken into consideration to provide recommendations.

Typically, in real-life settings, you will use actual data for user preferences. In our demo use case, we will also add demographic properties like ‘location’, ‘gender’ and ‘birth year’ for every user, which will allow us to do nuanced queries.

In the first for loop, we use MERGE statements to create the Person nodes. In the second, we use MATCH to look up the existing Movie and Person nodes, and then MERGE an opinion edge (such as LIKED or HATED) between them.

import random
import pandas as pd

# Assume 'data' is already loaded with pd.read_csv() and its titles were
# already escaped by clean_column() above, so no further escaping is needed
movie_names = data['Series_Title'].tolist()

# Demographic data ranges and options
birth_years_range = (1950, 2005)
genders = ["Male", "Female", "Other"]
locations = ["USA", "UK", "Canada", "Australia", "France", "Germany", "India", "Japan"]
num_persons = 100
num_data_points = 1000
opinions = ["liked", "loved", "ignored", "disliked", "hated"]

# First, create the Person nodes
for i in range(1, num_persons + 1):
    person_name = f"Person {i}"
    birth_year = random.randint(*birth_years_range)
    gender = random.choice(genders)
    location = random.choice(locations)
    person_node = f"MERGE (:Person {{name: '{person_name}', birthYear: {birth_year}, gender: '{gender}', location: '{location}'}})"
    print(person_node)
    graph.query(person_node)

# Then connect Person nodes to Movie nodes at random
for _ in range(num_data_points):
    person_index = random.randint(1, num_persons)
    person_name = f"Person {person_index}"
    opinion_edge = random.choice(opinions).upper()
    movie_name = random.choice(movie_names)
    opinion_query = f"MATCH (m:Movie {{title: '{movie_name}'}}), (p:Person {{name: '{person_name}'}})"
    opinion_query += f" MERGE (p)-[:{opinion_edge}]->(m)"
    print(opinion_query)
    graph.query(opinion_query)

graph.refresh_schema()
print("Added graph documents to FalkorDB")

MERGE (:Person {name: 'Person 1', birthYear: 1987, gender: 'Other', location: 'Japan'})

MERGE (:Person {name: 'Person 2', birthYear: 1968, gender: 'Female', location: 'Canada'})

MERGE (:Person {name: 'Person 24', birthYear: 1962, gender: 'Other', location: 'India'})

MERGE (:Person {name: 'Person 25', birthYear: 1952, gender: 'Female', location: 'USA'})

...

MATCH (m:Movie {title: 'The Sixth Sense'}), (p:Person {name: 'Person 17'}) MERGE (p)-[:LOVED]->(m)

MATCH (m:Movie {title: 'Deliverance'}), (p:Person {name: 'Person 46'}) MERGE (p)-[:LOVED]->(m)

MATCH (m:Movie {title: 'Rang De Basanti'}), (p:Person {name: 'Person 44'}) MERGE (p)-[:LIKED]->(m)

Added graph documents to FalkorDB
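One thing to keep in mind: since the data is generated with an unseeded random generator, every run yields a different graph, so your query results will not match the ones shown in this article. If you want reproducible demo data, one option is a seeded generator, as in the rough sketch below. The function name `make_persons` and the seed value are arbitrary choices of ours.

```python
import random

GENDERS = ["Male", "Female", "Other"]
LOCATIONS = ["USA", "UK", "Canada", "Australia", "France", "Germany", "India", "Japan"]


def make_persons(n, seed=42):
    """Generate n synthetic person records; the same seed gives the same records."""
    rng = random.Random(seed)  # private generator, does not touch global random state
    return [
        {
            "name": f"Person {i}",
            "birthYear": rng.randint(1950, 2005),
            "gender": rng.choice(GENDERS),
            "location": rng.choice(LOCATIONS),
        }
        for i in range(1, n + 1)
    ]


persons = make_persons(100)
print(persons[0])
```

Each record can then be turned into a MERGE statement exactly as in the loop above.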

Now let’s stitch together our RAG pipeline using LangChain.

We will use FalkorDBQAChain(), which allows us to use the Knowledge Graph provided by FalkorDB in conjunction with our LLM.

Note that the LLM is serving as our user interface, but the context data is actually fetched from the Knowledge Graph.

Here’s how it works under the hood:

- User enters a natural language query.

- The LLM translates that query into a Cypher query, which is used to query the Knowledge Graph.

- The response provided by the Knowledge Graph is then used by the LLM to form the final response to the user.
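These three steps can be sketched as a small function that wires the pieces together. The stubs below stand in for the LLM and the graph so that the flow can be followed without any API calls. This is only our simplified picture of the flow, not the actual FalkorDBQAChain implementation, and all function names are made up.

```python
def answer(question, to_cypher, run_query, summarize):
    """Run the question -> Cypher -> graph context -> final answer pipeline."""
    cypher = to_cypher(question)          # step 2: the LLM writes a Cypher query
    context = run_query(cypher)           # step 2: the graph executes it
    return summarize(question, context)   # step 3: the LLM phrases the answer


# Stubs standing in for the real LLM and graph calls
def fake_to_cypher(question):
    return "MATCH (s:Star)-[:STARRED_IN]->(m:Movie {title: 'Lifeboat'}) RETURN s.name"


def fake_run_query(cypher):
    return [["Tallulah Bankhead"], ["John Hodiak"]]


def fake_summarize(question, context):
    names = [row[0] for row in context]
    return "Answer based on graph context: " + ", ".join(names)


result = answer("Who starred in Lifeboat?", fake_to_cypher, fake_run_query, fake_summarize)
print(result)  # Answer based on graph context: Tallulah Bankhead, John Hodiak
```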

In the outputs below, note the sections that appear before ‘> Finished chain.’: they show the Cypher query that was generated and the raw output from the Knowledge Graph. This can help with debugging when you are not getting the results you expect.

Now, let’s run some queries on our Knowledge Graph. We will use ‘verbose=True’ to understand the Cypher query generated and the output.

from langchain_openai import ChatOpenAI

from langchain.chains import FalkorDBQAChain

chain = FalkorDBQAChain.from_llm(ChatOpenAI(temperature=0), graph=graph, verbose=True)

out1 = chain.run("Which movies did Christopher Nolan direct?")

print(out1)

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (d:Director {name: 'Christopher Nolan'})-[:DIRECTED]->(m:Movie)

RETURN m.title

Full Context:

[['The Dark Knight'], ['Inception'], ['Interstellar'], ['The Prestige'], ['The Dark Knight Rises'], ['Memento'], ['Batman Begins'], ['Dunkirk']]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.

Christopher Nolan directed several movies, including "The Dark Knight," "Inception," "Interstellar," "The Prestige," "The Dark Knight Rises," "Memento," "Batman Begins," and "Dunkirk."

out = chain.run("Who starred in Lifeboat?")

print(out)

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (s:Star)-[:STARRED_IN]->(m:Movie)

WHERE m.title = 'Lifeboat'

RETURN s.name

Full Context:

[['Tallulah Bankhead'], ['John Hodiak'], ['Walter Slezak'], ['William Bendix']]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.

The cast of Lifeboat includes Tallulah Bankhead, John Hodiak, Walter Slezak, and William Bendix.

out1 = chain.run("Which directors does Person 2 love?")

print(out1)

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (p:Person {name: 'Person 2'})-[:LOVED]->(m:Movie)<-[:DIRECTED]-(d:Director)

RETURN d.name

Full Context:

[['Yoshiaki Kawajiri'], ['Alfred Hitchcock'], ['Ashutosh Gowariker'], ['Anders Thomas Jensen'], ['Georges Franju'], ['Joel Coen'], ['Richard Linklater'], ['Michael Haneke'], ['Sam Mendes'], ['Clint Eastwood']]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.

Person 2 loves Alfred Hitchcock.

Now let’s try a query which uses user demographic information.

out1 = chain.run("Which movies did person born in year 1975 like?")

print(out1)

out2 = chain.run("Which movies should person born in year 1967 watch?")

print(out2)

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (p:Person {birthYear: 1975})-[:LIKED]->(m:Movie)

RETURN m.title

Full Context:

[['Ford v Ferrari'], ['Bound by Honor'], ['Logan'], ['The Maltese Falcon'], ['The Others']]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.

The person born in 1975 liked the movies "Ford v Ferrari," "Bound by Honor," "Logan," "The Maltese Falcon," and "The Others."

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (p:Person {birthYear: 1967})-[:LIKED|LOVED]->(m:Movie)

RETURN m.title

Full Context:

[['La haine'], ['Stand by Me'], ['The Dark Knight'], ['Rang De Basanti'], ['Empire of the Sun'], ['Creed']]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.

A person born in 1967 should consider watching the following movies: "La haine," "Stand by Me," "The Dark Knight," "Rang De Basanti," "Empire of the Sun," and "Creed." These movies offer a diverse range of genres and storytelling styles that can cater to different preferences. Enjoy the cinematic journey!

out1 = chain.run("Which films should person born in year 1972 not watch?")

print(out1)

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (p:Person {birthYear: 1972})-[:HATED|IGNORED|DISLIKED]->(m:Movie)

RETURN m.title

Full Context:

[['Letters from Iwo Jima'], ['The Conversation'], ['Jûbê ninpûchô'], ['Portrait de la jeune fille en feu'], ['Serpico'], ["Singin' in the Rain"], ['Wonder'], ['Patton'], ['The Count of Monte Cristo']]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.

A person born in 1972 should not watch the following films: "Letters from Iwo Jima," "The Conversation," "Jûbê ninpûchô," "Portrait de la jeune fille en feu," "Serpico," "Singin' in the Rain," "Wonder," "Patton," and "The Count of Monte Cristo."
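Notice that these demographic queries match people born in exactly the given year. To recommend based on people of a similar age, you could widen the match to a range of birth years. Here is a small in-memory sketch of that idea. The rows, the two-year window, and the function name `recommend` are all assumptions made for illustration.

```python
# (person, birth_year, movie, opinion) rows, made up for illustration
OPINIONS = [
    ("Person 1", 1967, "Stand by Me", "LOVED"),
    ("Person 2", 1968, "Creed", "LIKED"),
    ("Person 3", 1967, "Patton", "HATED"),
    ("Person 4", 1990, "Logan", "LOVED"),
]


def recommend(birth_year, opinions, window=2):
    """Suggest movies liked or loved by people born within `window` years."""
    positive = {"LIKED", "LOVED"}
    picks = []
    for _person, year, movie, opinion in opinions:
        close_in_age = abs(year - birth_year) <= window
        if close_in_age and opinion in positive and movie not in picks:
            picks.append(movie)
    return picks


print(recommend(1967, OPINIONS))  # ['Stand by Me', 'Creed']
```

In Cypher, the same widening could be expressed with a WHERE clause on `p.birthYear` instead of an exact property match.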

out1 = chain.run("Which person likes the director Christopher Nolan's films?")

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (p:Person)-[:LIKED]->(:Movie)<-[:DIRECTED]-(d:Director {name: 'Christopher Nolan'})

RETURN p.name

Full Context:

[['Person 9']]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.

out1 = chain.run("Where do people who like the director Martin Scorsese live?")

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (p:Person)-[:LIKED]->(:Movie)<-[:DIRECTED]-(d:Director {name: 'Martin Scorsese'})

RETURN p.location

Full Context:

[['France'], ['USA'], ['France']]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.

out1 = chain.run("What is the gender distribution of people who love films?")

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (p:Person)-[:LOVED]->(m:Movie)

RETURN p.gender, COUNT(*) as count

Full Context:

[['Male', 69], ['Other', 70], ['Female', 79]]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.

out1 = chain.run("Who are the people that liked Action, Adventure films?")

> Entering new FalkorDBQAChain chain...

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

Generated Cypher:

MATCH (p:Person)-[:LIKED]->(m:Movie)

WHERE m.genre CONTAINS 'Action' AND m.genre CONTAINS 'Adventure'

RETURN p.name

Full Context:

[['Person 36'], ['Person 49'], ['Person 56'], ['Person 96'], ['Person 64'], ['Person 47'], ['Person 49'], ['Person 72'], ['Person 16'], ['Person 5']]

INFO:httpx:HTTP Request: POST https://api.openai.com/v1/chat/completions "HTTP/1.1 200 OK"

> Finished chain.
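The context returned for this last query lists ‘Person 49’ twice, because that person liked more than one matching film. In Cypher you could ask for `RETURN DISTINCT p.name`. On the Python side, a quick way to deduplicate the rows while keeping their order is sketched below (the helper name `unique_names` is ours).

```python
# Rows copied from the "Full Context" output above
rows = [['Person 36'], ['Person 49'], ['Person 56'], ['Person 96'], ['Person 64'],
        ['Person 47'], ['Person 49'], ['Person 72'], ['Person 16'], ['Person 5']]


def unique_names(result_rows):
    """Flatten single-column rows and drop duplicates, preserving first-seen order."""
    names = [row[0] for row in result_rows]
    return list(dict.fromkeys(names))


people = unique_names(rows)
print(len(rows), len(people))  # 10 9
```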

We also created a Gradio interface to query the knowledge graph. A Gradio interface is a web-based UI component that allows users to interact with machine learning models and functions by providing inputs and displaying outputs.

import gradio as gr

# Assumes the FalkorDBQAChain object `chain` has been initialized as shown earlier

def ask_question(question):
    # Run the question through the chain and return the answer text
    output = chain.run(question)
    return output

# Gradio interface setup
iface = gr.Interface(
    fn=ask_question,
    inputs=gr.Textbox(lines=2, placeholder="Enter your question here..."),
    outputs=gr.Textbox(lines=10, label="Output"),  # extra lines for longer answers
    title="FalkorDB QA System",
    description="Ask any question related to the movie database.",
)

# Launch the Gradio app; share=True also creates a temporary public link
iface.launch(share=True)

Using Open-Source LLMs Instead of OpenAI

Now, what if you want to achieve the same RAG pipeline as above with open-source LLMs like variants of Mistral or Llama2?

Since we are using LangChain, it is actually really easy to replace the LLM argument in the function FalkorDBQAChain.from_llm() with an open-source one.

However, to do so and get similar results, you would need to customize the prompt that is used for Cypher generation. In order to control that, you can use an additional argument in the FalkorDBQAChain.from_llm function:

cypher_prompt: "[prompt template for cypher generation]"
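As a rough idea of what such a prompt could contain, here is a sketch of a template rendered with plain string formatting. The wording is ours, and the placeholder names `schema` and `question` are assumptions about what the chain supplies. To use it with the chain you would wrap the template in a LangChain prompt template object and pass that as `cypher_prompt`, so verify the expected input variables against your installed version.

```python
# Hypothetical prompt wording; tune it for the open-source model you use.
CYPHER_TEMPLATE = (
    "You translate questions into Cypher queries.\n"
    "Use only the node labels, relationship types and properties in this schema:\n"
    "{schema}\n"
    "Return the Cypher statement only, with no explanation.\n"
    "Question: {question}\n"
    "Cypher:"
)


def render_prompt(schema, question):
    """Fill the template with the graph schema and the user's question."""
    return CYPHER_TEMPLATE.format(schema=schema, question=question)


example_schema = "(:Director)-[:DIRECTED]->(:Movie), (:Star)-[:STARRED_IN]->(:Movie)"
print(render_prompt(example_schema, "Which movies did Christopher Nolan direct?"))
```

Smaller models often need stricter instructions like “return the Cypher statement only”, since any extra text in the reply would be sent to the graph as part of the query.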

To deep dive into this, you can check this. Or, better, wait for my next article.

Conclusion

In this blog, we introduced Knowledge Graphs as a powerful tool for representing interconnected information and used them to build a movie recommendation system. The implementation, which combines FalkorDB with LangChain and the OpenAI APIs, is a practical demonstration of how such a system can handle complex relationships and deliver tailored suggestions. You can create Knowledge Graphs for your own datasets, and then pair them with an LLM to enable generative question-answering capabilities.